US News: Melania Trump to shine a light on deepfake revenge porn victims

The team was disbanded after the inauguration of President Yoon Suk Yeol’s government in 2022. “And it has the potential to cause catastrophic consequences if the material is shared more widely. This Government will not tolerate it.” “It is another example of the ways in which certain people seek to degrade and dehumanise others – especially women.” It will apply regardless of whether the creator of an image intended to share it, the Ministry of Justice (MoJ) said.

The most notorious marketplace in the deepfake porn economy is MrDeepFakes, a website that hosts thousands of videos and images, has close to 650,000 members, and receives millions of visits a month. The term “deepfakes” combines “deep learning” and “fake” to describe content that depicts someone, often a celebrity, engaged in sexual acts that they never consented to. While the technology itself is neutral, its nonconsensual use to create involuntary pornographic deepfakes has become increasingly common. Deepfake porn relies on advanced deep-learning algorithms that can analyze facial features and expressions in order to produce realistic face swapping in videos and images. For meaningful change, the government needs to hold service providers such as social media platforms and messaging apps accountable for ensuring user safety.

As reported by WIRED, female Twitch streamers targeted by deepfakes have described feeling violated, being exposed to more harassment, and losing time, and some said the nonconsensual content reached family members. Successive governments have committed to legislating against the creation of deepfakes (Rishi Sunak in April 2024, Keir Starmer in January 2025). Labour’s 2024 manifesto pledged “to ensure the safe development and use of AI models by introducing binding regulation… and by banning the creation of sexually explicit deepfakes”. But what was promised in opposition has been slow to materialise in power – the lack of legislative detail was a notable omission from the King’s Speech.

One website dealing in photos claims it has “undressed” people in 350,100 photos. Deepfake pornography, or simply fake pornography, is a type of synthetic pornography that is created by altering already-existing photographs or video, applying deepfake technology to the images of the participants. The use of deepfake porn has sparked controversy because it involves the making and sharing of realistic videos featuring non-consenting individuals, typically female celebrities, and is sometimes used for revenge porn. Efforts are being made to address these ethical concerns through legislation and technology-based solutions.

Researchers have searched the web for the AI-generated “deepfake” videos the internet has to offer, and (surprise!) most of the content, at 96 percent, is pornography. The deepfake pornography exclusively targeted women, 99 percent of whom are actresses or musicians, and did so without their consent, according to Deeptrace, an Amsterdam-based company that specializes in detecting deepfakes. There are also few avenues of justice for those who find themselves the victims of deepfake pornography. Not all states have laws against deepfake pornography; some make it a crime, while others only allow the victim to pursue a civil case.

If passed, the bill would allow victims of deepfake porn to sue as long as they could prove the deepfakes had been made without their consent. In June, Republican senator Ted Cruz introduced the Take It Down Act, which would require platforms to remove both revenge porn and nonconsensual deepfake porn. Measuring the full scale of deepfake videos and images online is incredibly difficult. Tracking where the content is shared on social media is challenging, while abusive content is also shared in private messaging groups or closed channels, often by people known to the victims. In September, more than 20 girls aged 11 to 17 came forward in the Spanish town of Almendralejo after AI tools were used to generate naked photos of them without their knowledge.

GitHub’s Deepfake Porn Crackdown Still Isn’t Working

The livestreaming site Twitch recently released a statement against deepfake porn after a slew of deepfakes targeting popular female Twitch streamers began to circulate. Last month, the FBI issued a warning about “online sextortion scams,” in which scammers use content from a victim’s social media to create deepfakes and then demand payment in order not to share them. In late November, a deepfake porn maker claiming to be based in the US uploaded a sexually explicit video to the world’s largest website for pornographic deepfakes, featuring TikTok influencer Charli D’Amelio’s face superimposed onto a porn performer’s body. Despite the influencer presumably playing no role in the video’s production, it was viewed more than 8,200 times and captured the attention of other deepfake fans. Experts say that alongside new laws, better education about the technologies is needed, as well as measures to stop the spread of tools created to cause harm. This includes action by companies that host websites and by search engines, including Google and Microsoft’s Bing.

Some, like the repository disabled in August, have purpose-built communities around them for explicit uses. The model positioned itself as a tool for deepfake porn, says Ajder, becoming a “funnel” for abuse, which predominantly targets women. The findings arrive as lawmakers and tech experts are concerned that the same AI video-editing technologies could be used to spread propaganda in a US election.

- Technologists have highlighted the need for solutions such as digital watermarking to authenticate media and detect involuntary deepfakes.

- While revenge porn, or the nonconsensual sharing of intimate images, has existed for nearly as long as the internet, the proliferation of AI tools means that anyone can be targeted by this form of harassment, even if they’ve never taken or sent a nude photo.

- “All we have to have is a human form to be a victim.” That’s how lawyer Carrie Goldberg describes the risk of deepfake porn in the age of artificial intelligence.

- Further exacerbating the problem, it is not always clear who is responsible for publishing the nonconsensual intimate imagery (NCII).

- Its homepage, when visited from the UK, displays a message saying access is denied.

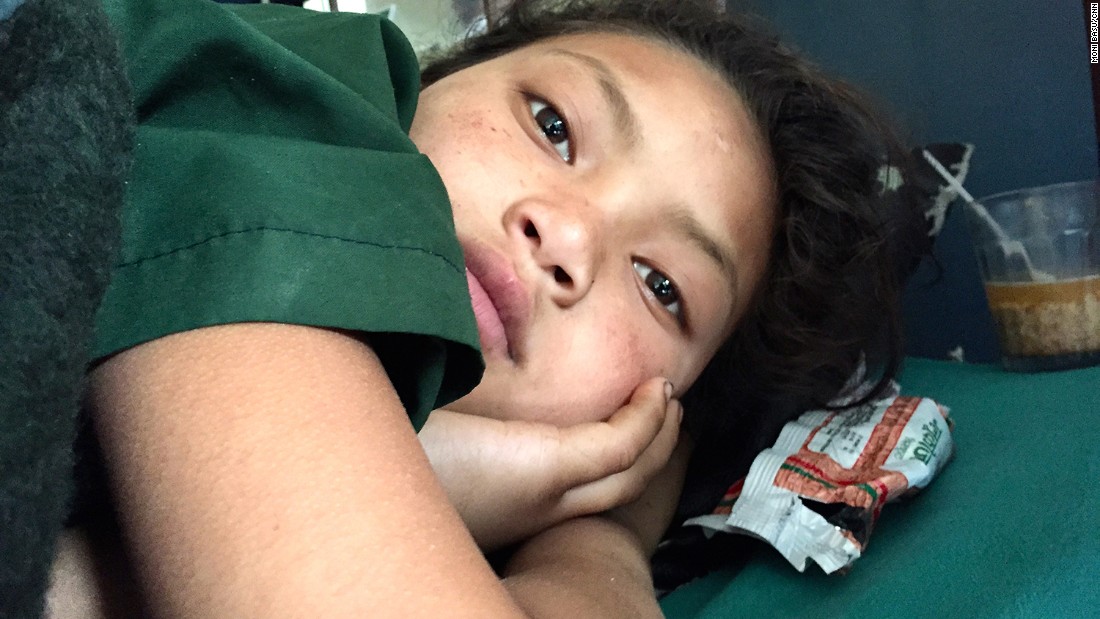

It emerged in South Korea in August 2024 that many teachers and female students had been victims of deepfake images created by users who employed AI technology. Journalist Ko Narin of the Hankyoreh uncovered the deepfake images through Telegram chats. On Telegram, group chats were created specifically for image-based sexual abuse of women, including middle and high school students, teachers, and even family members. Women with photos on social media platforms such as KakaoTalk, Instagram, and Facebook are often targeted as well. Perpetrators use AI bots to generate fake images, which are then sold or widely shared, along with the victims’ social media accounts, phone numbers, and KakaoTalk usernames. One Telegram group reportedly drew around 220,000 members, according to a Guardian report.

In this Q&A, doctoral candidate Sophie Maddocks addresses the growing problem of image-based sexual abuse. In the past year, targets of AI-generated, non-consensual pornographic images have ranged from prominent women such as Taylor Swift and Rep. Alexandria Ocasio-Cortez to high school girls. First lady Melania Trump is expected to speak publicly Saturday, for the first time since her husband returned to office, highlighting her support for a bill aimed at protecting Americans from deepfake and revenge porn. Ofcom, the UK’s communications regulator, has the power to pursue action against harmful websites under the UK’s controversial, sweeping online safety laws that came into force last year.

The horror confronting Jodie, her friends and other victims is not caused by unknown “perverts” on the internet, but by ordinary, everyday men and boys. Perpetrators of deepfake sexual abuse can be our friends, acquaintances, colleagues or classmates. Teenage girls around the world have realised that their classmates are using apps to transform their social media posts into nudes and sharing them in groups.

Currently, Digital Millennium Copyright Act (DMCA) complaints are the primary legal mechanism that women have to get videos removed from websites. The gateway to many of the websites and tools used to create deepfake videos or images is search. Millions of people are directed to the websites analyzed by the researcher, with 50 to 80 percent of people finding their way to the sites via search. Finding deepfake videos through search is trivial and does not require a person to have any special knowledge about what to search for. Many of the websites make it clear that they host or distribute deepfake porn videos, often featuring the word deepfakes or derivatives of it in their name.

However, these powers are not yet fully operational, and Ofcom is still consulting on them. The spokesman added that the app’s promotion on the deepfake website came through its affiliate programme. “The online marketing ecosystem is complex, and some affiliate publishers have more than 100 websites where they might place our advertisements,” he said.

And while criminal justice is not the only, or even the primary, solution to sexual violence because of continuing police and judicial failures, it is one redress option. We also need new civil powers to enable judges to order internet platforms and perpetrators to take down and delete imagery, and to require that compensation be paid where appropriate. A law that only criminalises the distribution of deepfake porn ignores the fact that the non-consensual creation of the material is itself a violation.